diff --git a/DCGAN-PyTorch/01-DCGAN-PL.ipynb b/DCGAN-PyTorch/01-DCGAN-PL.ipynb

new file mode 100644

index 0000000000000000000000000000000000000000..af0e5cc8d7ec3af234d193ce0c1ef3becf84bb2e

--- /dev/null

+++ b/DCGAN-PyTorch/01-DCGAN-PL.ipynb

@@ -0,0 +1,462 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "<img width=\"800px\" src=\"../fidle/img/00-Fidle-header-01.svg\"></img>\n",

+ "\n",

+ "# <!-- TITLE --> [SHEEP3] - A DCGAN to Draw a Sheep, with Pytorch Lightning\n",

+ "<!-- DESC --> Episode 1 : Draw me a sheep, revisited with a DCGAN, writing in Pytorch Lightning\n",

+ "<!-- AUTHOR : Jean-Luc Parouty (CNRS/SIMaP) -->\n",

+ "\n",

+ "## Objectives :\n",

+ " - Build and train a DCGAN model with the Quick Draw dataset\n",

+ " - Understanding DCGAN\n",

+ "\n",

+ "The [Quick draw dataset](https://quickdraw.withgoogle.com/data) contains about 50.000.000 drawings, made by real people... \n",

+ "We are using a subset of 117.555 of Sheep drawings \n",

+ "To get the dataset : [https://github.com/googlecreativelab/quickdraw-dataset](https://github.com/googlecreativelab/quickdraw-dataset) \n",

+ "Datasets in numpy bitmap file : [https://console.cloud.google.com/storage/quickdraw_dataset/full/numpy_bitmap](https://console.cloud.google.com/storage/quickdraw_dataset/full/numpy_bitmap) \n",

+ "Sheep dataset : [https://storage.googleapis.com/quickdraw_dataset/full/numpy_bitmap/sheep.npy](https://storage.googleapis.com/quickdraw_dataset/full/numpy_bitmap/sheep.npy) (94.3 Mo)\n",

+ "\n",

+ "\n",

+ "## What we're going to do :\n",

+ "\n",

+ " - Have a look to the dataset\n",

+ " - Defining a GAN model\n",

+ " - Build the model\n",

+ " - Train it\n",

+ " - Have a look of the results"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Step 1 - Init and parameters\n",

+ "#### Python init"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "tags": []

+ },

+ "outputs": [],

+ "source": [

+ "import os\n",

+ "import sys\n",

+ "import shutil\n",

+ "\n",

+ "import numpy as np\n",

+ "import torch\n",

+ "import torch.nn as nn\n",

+ "import torch.nn.functional as F\n",

+ "import torchvision\n",

+ "import torchvision.transforms as transforms\n",

+ "from lightning import LightningDataModule, LightningModule, Trainer\n",

+ "from lightning.pytorch.callbacks.progress.tqdm_progress import TQDMProgressBar\n",

+ "from lightning.pytorch.callbacks.progress.base import ProgressBarBase\n",

+ "from lightning.pytorch.callbacks import ModelCheckpoint\n",

+ "from lightning.pytorch.loggers.tensorboard import TensorBoardLogger\n",

+ "\n",

+ "from tqdm import tqdm\n",

+ "from torch.utils.data import DataLoader\n",

+ "\n",

+ "import fidle\n",

+ "\n",

+ "from modules.SmartProgressBar import SmartProgressBar\n",

+ "from modules.QuickDrawDataModule import QuickDrawDataModule\n",

+ "\n",

+ "from modules.GAN import GAN\n",

+ "from modules.WGANGP import WGANGP\n",

+ "from modules.Generators import *\n",

+ "from modules.Discriminators import *\n",

+ "\n",

+ "# Init Fidle environment\n",

+ "run_id, run_dir, datasets_dir = fidle.init('SHEEP3')"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Few parameters"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "tags": []

+ },

+ "outputs": [],

+ "source": [

+ "latent_dim = 128\n",

+ "\n",

+ "gan_class = 'WGANGP'\n",

+ "generator_class = 'Generator_2'\n",

+ "discriminator_class = 'Discriminator_3' \n",

+ " \n",

+ "scale = 0.001\n",

+ "epochs = 3\n",

+ "lr = 0.0001\n",

+ "b1 = 0.5\n",

+ "b2 = 0.999\n",

+ "batch_size = 32\n",

+ "num_img = 48\n",

+ "fit_verbosity = 2\n",

+ " \n",

+ "dataset_file = datasets_dir+'/QuickDraw/origine/sheep.npy' \n",

+ "data_shape = (28,28,1)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Cleaning"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# You can comment these lines to keep each run...\n",

+ "shutil.rmtree(f'{run_dir}/figs', ignore_errors=True)\n",

+ "shutil.rmtree(f'{run_dir}/models', ignore_errors=True)\n",

+ "shutil.rmtree(f'{run_dir}/tb_logs', ignore_errors=True)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Step 2 - Get some nice data"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Get a Nice DataModule\n",

+ "Our DataModule is defined in [./modules/QuickDrawDataModule.py](./modules/QuickDrawDataModule.py) \n",

+ "This is a [LightningDataModule](https://pytorch-lightning.readthedocs.io/en/stable/data/datamodule.html)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "tags": []

+ },

+ "outputs": [],

+ "source": [

+ "dm = QuickDrawDataModule(dataset_file, scale, batch_size, num_workers=8)\n",

+ "dm.setup()"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Have a look"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "dl = dm.train_dataloader()\n",

+ "batch_data = next(iter(dl))\n",

+ "\n",

+ "fidle.scrawler.images( batch_data.reshape(-1,28,28), indices=range(batch_size), columns=12, x_size=1, y_size=1, \n",

+ " y_padding=0,spines_alpha=0, save_as='01-Sheeps')"

+ ]

+ },

+ {

+ "attachments": {},

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Step 3 - Get a nice GAN model\n",

+ "\n",

+ "Our Generators are defined in [./modules/Generators.py](./modules/Generators.py) \n",

+ "Our Discriminators are defined in [./modules/Discriminators.py](./modules/Discriminators.py) \n",

+ "\n",

+ "\n",

+ "Our GAN is defined in [./modules/GAN.py](./modules/GAN.py) \n",

+ "\n",

+ "#### Class loader"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "def get_class(class_name):\n",

+ " module=sys.modules['__main__']\n",

+ " class_ = getattr(module, class_name)\n",

+ " return class_\n",

+ " \n",

+ "def get_instance(class_name, **args):\n",

+ " module=sys.modules['__main__']\n",

+ " class_ = getattr(module, class_name)\n",

+ " instance_ = class_(**args)\n",

+ " return instance_"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Basic test - Just to be sure it (could) works... ;-)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "tags": []

+ },

+ "outputs": [],

+ "source": [

+ "# ---- A little piece of black magic to instantiate a class from its name\n",

+ "#\n",

+ "def get_classByName(class_name, **args):\n",

+ " module=sys.modules['__main__']\n",

+ " class_ = getattr(module, class_name)\n",

+ " instance_ = class_(**args)\n",

+ " return instance_\n",

+ "\n",

+ "# ----Get it, and play with them\n",

+ "#\n",

+ "print('\\nInstantiation :\\n')\n",

+ "\n",

+ "Generator_ = get_class(generator_class)\n",

+ "Discriminator_ = get_class(discriminator_class)\n",

+ "\n",

+ "generator = Generator_( latent_dim=latent_dim, data_shape=data_shape)\n",

+ "discriminator = Discriminator_( latent_dim=latent_dim, data_shape=data_shape)\n",

+ "\n",

+ "print('\\nFew tests :\\n')\n",

+ "z = torch.randn(batch_size, latent_dim)\n",

+ "print('z size : ',z.size())\n",

+ "\n",

+ "fake_img = generator.forward(z)\n",

+ "print('fake_img : ', fake_img.size())\n",

+ "\n",

+ "p = discriminator.forward(fake_img)\n",

+ "print('pred fake : ', p.size())\n",

+ "\n",

+ "print('batch_data : ',batch_data.size())\n",

+ "\n",

+ "p = discriminator.forward(batch_data)\n",

+ "print('pred real : ', p.size())\n",

+ "\n",

+ "nimg = fake_img.detach().numpy()\n",

+ "fidle.scrawler.images( nimg.reshape(-1,28,28), indices=range(batch_size), columns=12, x_size=1, y_size=1, \n",

+ " y_padding=0,spines_alpha=0, save_as='01-Sheeps')"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "\n",

+ "print(fake_img.size())\n",

+ "print(batch_data.size())\n",

+ "e = torch.distributions.uniform.Uniform(0, 1).sample([32,1])\n",

+ "e = e[:None,None,None]\n",

+ "i = fake_img * e + (1-e)*batch_data\n",

+ "\n",

+ "\n",

+ "nimg = i.detach().numpy()\n",

+ "fidle.scrawler.images( nimg.reshape(-1,28,28), indices=range(batch_size), columns=12, x_size=1, y_size=1, \n",

+ " y_padding=0,spines_alpha=0, save_as='01-Sheeps')\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### GAN model\n",

+ "To simplify our code, the GAN class is defined separately in the module [./modules/GAN.py](./modules/GAN.py) \n",

+ "Passing the classe names for generator/discriminator by parameter allows to stay modular and to use the PL checkpoints."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "GAN_ = get_class(gan_class)\n",

+ "\n",

+ "gan = GAN_( data_shape = data_shape,\n",

+ " lr = lr,\n",

+ " b1 = b1,\n",

+ " b2 = b2,\n",

+ " batch_size = batch_size, \n",

+ " latent_dim = latent_dim, \n",

+ " generator_class = generator_class, \n",

+ " discriminator_class = discriminator_class)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Step 5 - Train it !\n",

+ "#### Instantiate Callbacks, Logger & co.\n",

+ "More about :\n",

+ "- [Checkpoints](https://pytorch-lightning.readthedocs.io/en/stable/common/checkpointing_basic.html)\n",

+ "- [modelCheckpoint](https://pytorch-lightning.readthedocs.io/en/stable/api/pytorch_lightning.callbacks.ModelCheckpoint.html#pytorch_lightning.callbacks.ModelCheckpoint)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "tags": []

+ },

+ "outputs": [],

+ "source": [

+ "\n",

+ "# ---- for tensorboard logs\n",

+ "#\n",

+ "logger = TensorBoardLogger( save_dir = f'{run_dir}',\n",

+ " name = 'tb_logs' )\n",

+ "\n",

+ "log_dir = os.path.abspath(f'{run_dir}/tb_logs')\n",

+ "print('To access the logs with tensorboard, use this command line :')\n",

+ "print(f'tensorboard --logdir {log_dir}')\n",

+ "\n",

+ "# ---- To save checkpoints\n",

+ "#\n",

+ "callback_checkpoints = ModelCheckpoint( dirpath = f'{run_dir}/models', \n",

+ " filename = 'bestModel', \n",

+ " save_top_k = 1, \n",

+ " save_last = True,\n",

+ " every_n_epochs = 1, \n",

+ " monitor = \"g_loss\")\n",

+ "\n",

+ "# ---- To have a nive progress bar\n",

+ "#\n",

+ "callback_progressBar = SmartProgressBar(verbosity=2) # Usable evertywhere\n",

+ "# progress_bar = TQDMProgressBar(refresh_rate=1) # Usable in real jupyter lab (bug in vscode)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "#### Train it"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "tags": []

+ },

+ "outputs": [],

+ "source": [

+ "\n",

+ "trainer = Trainer(\n",

+ " accelerator = \"auto\",\n",

+ " max_epochs = epochs,\n",

+ " callbacks = [callback_progressBar, callback_checkpoints],\n",

+ " log_every_n_steps = batch_size,\n",

+ " logger = logger\n",

+ ")\n",

+ "\n",

+ "trainer.fit(gan, dm)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Step 6 - Reload our best model\n",

+ "Note : "

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "gan = WGANGP.load_from_checkpoint('./run/SHEEP3/models/bestModel.ckpt')"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "nb_images = 96\n",

+ "\n",

+ "z = torch.randn(nb_images, latent_dim)\n",

+ "print('z size : ',z.size())\n",

+ "\n",

+ "fake_img = gan.generator.forward(z)\n",

+ "print('fake_img : ', fake_img.size())\n",

+ "\n",

+ "nimg = fake_img.detach().numpy()\n",

+ "fidle.scrawler.images( nimg.reshape(-1,28,28), indices=range(nb_images), columns=12, x_size=1, y_size=1, \n",

+ " y_padding=0,spines_alpha=0, save_as='01-Sheeps')"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "fidle.end()"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "---\n",

+ "<img width=\"80px\" src=\"../fidle/img/00-Fidle-logo-01.svg\"></img>"

+ ]

+ }

+ ],

+ "metadata": {

+ "kernelspec": {

+ "display_name": "fidle-env",

+ "language": "python",

+ "name": "python3"

+ },

+ "language_info": {

+ "codemirror_mode": {

+ "name": "ipython",

+ "version": 3

+ },

+ "file_extension": ".py",

+ "mimetype": "text/x-python",

+ "name": "python",

+ "nbconvert_exporter": "python",

+ "pygments_lexer": "ipython3",

+ "version": "3.9.2"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 4

+}

diff --git a/DCGAN-PyTorch/modules/Discriminators.py b/DCGAN-PyTorch/modules/Discriminators.py

new file mode 100644

index 0000000000000000000000000000000000000000..bdbaa79c08332bfcdd6c6a6e8ad3a4cee62f02e9

--- /dev/null

+++ b/DCGAN-PyTorch/modules/Discriminators.py

@@ -0,0 +1,136 @@

+# ------------------------------------------------------------------

+# _____ _ _ _

+# | ___(_) __| | | ___

+# | |_ | |/ _` | |/ _ \

+# | _| | | (_| | | __/

+# |_| |_|\__,_|_|\___| GAN / Generators

+# ------------------------------------------------------------------

+# Formation Introduction au Deep Learning (FIDLE)

+# CNRS/SARI/DEVLOG MIAI/EFELIA 2023 - https://fidle.cnrs.fr

+# ------------------------------------------------------------------

+# by JL Parouty (feb 2023) - PyTorch Lightning example

+

+import numpy as np

+import torch.nn as nn

+

+class Discriminator_1(nn.Module):

+ '''

+ A basic DNN discriminator, usable with classic GAN

+ '''

+

+ def __init__(self, latent_dim=None, data_shape=None):

+

+ super().__init__()

+ self.img_shape = data_shape

+ print('init discriminator 1 : ',data_shape,' to sigmoid')

+

+ self.model = nn.Sequential(

+

+ nn.Flatten(),

+ nn.Linear(int(np.prod(data_shape)), 512),

+ nn.ReLU(),

+

+ nn.Linear(512, 256),

+ nn.ReLU(),

+

+ nn.Linear(256, 1),

+ nn.Sigmoid(),

+ )

+

+ def forward(self, img):

+ validity = self.model(img)

+

+ return validity

+

+

+

+

+class Discriminator_2(nn.Module):

+ '''

+ A more efficient discriminator,based on CNN, usable with classic GAN

+ '''

+

+ def __init__(self, latent_dim=None, data_shape=None):

+

+ super().__init__()

+ self.img_shape = data_shape

+ print('init discriminator 2 : ',data_shape,' to sigmoid')

+

+ self.model = nn.Sequential(

+

+ nn.Conv2d(1, 32, kernel_size = 3, stride = 2, padding = 1),

+ nn.ReLU(),

+ nn.BatchNorm2d(32),

+ nn.Dropout2d(0.25),

+

+ nn.Conv2d(32, 64, kernel_size = 3, stride = 1, padding = 1),

+ nn.ReLU(),

+ nn.BatchNorm2d(64),

+ nn.Dropout2d(0.25),

+

+ nn.Conv2d(64, 128, kernel_size = 3, stride = 1, padding = 1),

+ nn.ReLU(),

+ nn.BatchNorm2d(128),

+ nn.Dropout2d(0.25),

+

+ nn.Conv2d(128, 256, kernel_size = 3, stride = 2, padding = 1),

+ nn.ReLU(),

+ nn.BatchNorm2d(256),

+ nn.Dropout2d(0.25),

+

+ nn.Flatten(),

+ nn.Linear(12544, 1),

+ nn.Sigmoid(),

+ )

+

+ def forward(self, img):

+ img_nchw = img.permute(0, 3, 1, 2) # reformat from NHWC to NCHW

+ validity = self.model(img_nchw)

+

+ return validity

+

+

+

+class Discriminator_3(nn.Module):

+ '''

+ A CNN discriminator, usable with a WGANGP.

+ This discriminator has no sigmoid and returns a critical and not a probability

+ '''

+

+ def __init__(self, latent_dim=None, data_shape=None):

+

+ super().__init__()

+ self.img_shape = data_shape

+ print('init discriminator 2 : ',data_shape,' to sigmoid')

+

+ self.model = nn.Sequential(

+

+ nn.Conv2d(1, 32, kernel_size = 3, stride = 2, padding = 1),

+ nn.ReLU(),

+ nn.BatchNorm2d(32),

+ nn.Dropout2d(0.25),

+

+ nn.Conv2d(32, 64, kernel_size = 3, stride = 1, padding = 1),

+ nn.ReLU(),

+ nn.BatchNorm2d(64),

+ nn.Dropout2d(0.25),

+

+ nn.Conv2d(64, 128, kernel_size = 3, stride = 1, padding = 1),

+ nn.ReLU(),

+ nn.BatchNorm2d(128),

+ nn.Dropout2d(0.25),

+

+ nn.Conv2d(128, 256, kernel_size = 3, stride = 2, padding = 1),

+ nn.ReLU(),

+ nn.BatchNorm2d(256),

+ nn.Dropout2d(0.25),

+

+ nn.Flatten(),

+ nn.Linear(12544, 1),

+ )

+

+ def forward(self, img):

+ img_nchw = img.permute(0, 3, 1, 2) # reformat from NHWC to NCHW

+ validity = self.model(img_nchw)

+

+ return validity

\ No newline at end of file

diff --git a/DCGAN-PyTorch/modules/GAN.py b/DCGAN-PyTorch/modules/GAN.py

new file mode 100644

index 0000000000000000000000000000000000000000..cf5a5697f5e259178411706c52decdef6f176eea

--- /dev/null

+++ b/DCGAN-PyTorch/modules/GAN.py

@@ -0,0 +1,182 @@

+

+# ------------------------------------------------------------------

+# _____ _ _ _

+# | ___(_) __| | | ___

+# | |_ | |/ _` | |/ _ \

+# | _| | | (_| | | __/

+# |_| |_|\__,_|_|\___| GAN / GAN LigthningModule

+# ------------------------------------------------------------------

+# Formation Introduction au Deep Learning (FIDLE)

+# CNRS/SARI/DEVLOG MIAI/EFELIA 2023 - https://fidle.cnrs.fr

+# ------------------------------------------------------------------

+# by JL Parouty (feb 2023) - PyTorch Lightning example

+

+

+import sys

+import numpy as np

+import torch

+import torch.nn.functional as F

+import torchvision

+from lightning import LightningModule

+

+

+class GAN(LightningModule):

+

+ # -------------------------------------------------------------------------

+ # Init

+ # -------------------------------------------------------------------------

+ #

+ def __init__(

+ self,

+ data_shape = (None,None,None),

+ latent_dim = None,

+ lr = 0.0002,

+ b1 = 0.5,

+ b2 = 0.999,

+ batch_size = 64,

+ generator_class = None,

+ discriminator_class = None,

+ **kwargs,

+ ):

+ super().__init__()

+

+ print('\n---- GAN initialization --------------------------------------------')

+

+ # ---- Hyperparameters

+ #

+ # Enable Lightning to store all the provided arguments under the self.hparams attribute.

+ # These hyperparameters will also be stored within the model checkpoint.

+ #

+ self.save_hyperparameters()

+

+ print('Hyperarameters are :')

+ for name,value in self.hparams.items():

+ print(f'{name:24s} : {value}')

+

+ # ---- Generator/Discriminator instantiation

+ #

+ # self.generator = Generator(latent_dim=self.hparams.latent_dim, img_shape=data_shape)

+ # self.discriminator = Discriminator(img_shape=data_shape)

+

+ print('Submodels :')

+ module=sys.modules['__main__']

+ class_g = getattr(module, generator_class)

+ class_d = getattr(module, discriminator_class)

+ self.generator = class_g( latent_dim=latent_dim, data_shape=data_shape)

+ self.discriminator = class_d( latent_dim=latent_dim, data_shape=data_shape)

+

+ # ---- Validation and example data

+ #

+ self.validation_z = torch.randn(8, self.hparams.latent_dim)

+ self.example_input_array = torch.zeros(2, self.hparams.latent_dim)

+

+

+ def forward(self, z):

+ return self.generator(z)

+

+

+ def adversarial_loss(self, y_hat, y):

+ return F.binary_cross_entropy(y_hat, y)

+

+

+ def training_step(self, batch, batch_idx, optimizer_idx):

+ imgs = batch

+ batch_size = batch.size(0)

+

+ # ---- Get some latent space vectors

+ # We use type_as() to make sure we initialize z on the right device (GPU/CPU).

+ #

+ z = torch.randn(batch_size, self.hparams.latent_dim)

+ z = z.type_as(imgs)

+

+ # ---- Train generator

+ # Generator use optimizer #0

+ # We try to generate false images that could mislead the discriminator

+ #

+ if optimizer_idx == 0:

+

+ # Generate fake images

+ self.fake_imgs = self.generator.forward(z)

+

+ # Assemble labels that say all images are real, yes it's a lie ;-)

+ # put on GPU because we created this tensor inside training_loop

+ misleading_labels = torch.ones(batch_size, 1)

+ misleading_labels = misleading_labels.type_as(imgs)

+

+ # Adversarial loss is binary cross-entropy

+ g_loss = self.adversarial_loss(self.discriminator.forward(self.fake_imgs), misleading_labels)

+ self.log("g_loss", g_loss, prog_bar=True)

+ return g_loss

+

+ # ---- Train discriminator

+ # Discriminator use optimizer #1

+ # We try to make the difference between fake images and real ones

+ #

+ if optimizer_idx == 1:

+

+ # These images are reals

+ real_labels = torch.ones(batch_size, 1)

+ real_labels = real_labels.type_as(imgs)

+ pred_labels = self.discriminator.forward(imgs)

+

+ real_loss = self.adversarial_loss(pred_labels, real_labels)

+

+ # These images are fake

+ fake_imgs = self.generator.forward(z)

+ fake_labels = torch.zeros(batch_size, 1)

+ fake_labels = fake_labels.type_as(imgs)

+

+ fake_loss = self.adversarial_loss(self.discriminator(fake_imgs.detach()), fake_labels)

+

+ # Discriminator loss is the average

+ d_loss = (real_loss + fake_loss) / 2

+ self.log("d_loss", d_loss, prog_bar=True)

+ return d_loss

+

+

+ def configure_optimizers(self):

+

+ lr = self.hparams.lr

+ b1 = self.hparams.b1

+ b2 = self.hparams.b2

+

+ # With a GAN, we need 2 separate optimizer.

+ # opt_g to optimize the generator #0

+ # opt_d to optimize the discriminator #1

+ # opt_g = torch.optim.Adam(self.generator.parameters(), lr=lr, betas=(b1, b2))

+ # opt_d = torch.optim.Adam(self.discriminator.parameters(), lr=lr, betas=(b1, b2),)

+ opt_g = torch.optim.Adam(self.generator.parameters(), lr=lr)

+ opt_d = torch.optim.Adam(self.discriminator.parameters(), lr=lr)

+ return [opt_g, opt_d], []

+

+

+ def training_epoch_end(self, outputs):

+

+ # Get our validation latent vectors as z

+ # z = self.validation_z.type_as(self.generator.model[0].weight)

+

+ # ---- Log Graph

+ #

+ if(self.current_epoch==1):

+ sampleImg=torch.rand((1,28,28,1))

+ sampleImg=sampleImg.type_as(self.generator.model[0].weight)

+ self.logger.experiment.add_graph(self.discriminator,sampleImg)

+

+ # ---- Log d_loss/epoch

+ #

+ g_loss, d_loss = 0,0

+ for metrics in outputs:

+ g_loss+=float( metrics[0]['loss'] )

+ d_loss+=float( metrics[1]['loss'] )

+ g_loss, d_loss = g_loss/len(outputs), d_loss/len(outputs)

+ self.logger.experiment.add_scalar("g_loss/epochs",g_loss, self.current_epoch)

+ self.logger.experiment.add_scalar("d_loss/epochs",d_loss, self.current_epoch)

+

+ # ---- Log some of these images

+ #

+ z = torch.randn(self.hparams.batch_size, self.hparams.latent_dim)

+ z = z.type_as(self.generator.model[0].weight)

+ sample_imgs = self.generator(z)

+ sample_imgs = sample_imgs.permute(0, 3, 1, 2) # from NHWC to NCHW

+ grid = torchvision.utils.make_grid(tensor=sample_imgs, nrow=12, )

+ self.logger.experiment.add_image(f"Generated images", grid,self.current_epoch)

diff --git a/DCGAN-PyTorch/modules/Generators.py b/DCGAN-PyTorch/modules/Generators.py

new file mode 100644

index 0000000000000000000000000000000000000000..9b104d579469f51dfda08b1332c9b100b6fddaa4

--- /dev/null

+++ b/DCGAN-PyTorch/modules/Generators.py

@@ -0,0 +1,94 @@

+

+# ------------------------------------------------------------------

+# _____ _ _ _

+# | ___(_) __| | | ___

+# | |_ | |/ _` | |/ _ \

+# | _| | | (_| | | __/

+# |_| |_|\__,_|_|\___| GAN / Generators

+# ------------------------------------------------------------------

+# Formation Introduction au Deep Learning (FIDLE)

+# CNRS/SARI/DEVLOG MIAI/EFELIA 2023 - https://fidle.cnrs.fr

+# ------------------------------------------------------------------

+# by JL Parouty (feb 2023) - PyTorch Lightning example

+

+

+import numpy as np

+import torch.nn as nn

+

+

+class Generator_1(nn.Module):

+

+ def __init__(self, latent_dim=None, data_shape=None):

+ super().__init__()

+ self.latent_dim = latent_dim

+ self.img_shape = data_shape

+ print('init generator 1 : ',latent_dim,' to ',data_shape)

+

+ self.model = nn.Sequential(

+

+ nn.Linear(latent_dim, 128),

+ nn.ReLU(),

+

+ nn.Linear(128,256),

+ nn.BatchNorm1d(256, 0.8),

+ nn.ReLU(),

+

+ nn.Linear(256, 512),

+ nn.BatchNorm1d(512, 0.8),

+ nn.ReLU(),

+

+ nn.Linear(512, 1024),

+ nn.BatchNorm1d(1024, 0.8),

+ nn.ReLU(),

+

+ nn.Linear(1024, int(np.prod(data_shape))),

+ nn.Sigmoid()

+

+ )

+

+

+ def forward(self, z):

+ img = self.model(z)

+ img = img.view(img.size(0), *self.img_shape)

+ return img

+

+

+

+class Generator_2(nn.Module):

+

+ def __init__(self, latent_dim=None, data_shape=None):

+ super().__init__()

+ self.latent_dim = latent_dim

+ self.img_shape = data_shape

+ print('init generator 2 : ',latent_dim,' to ',data_shape)

+

+ self.model = nn.Sequential(

+

+ nn.Linear(latent_dim, 7*7*64),

+ nn.Unflatten(1, (64,7,7)),

+

+ # nn.UpsamplingNearest2d( scale_factor=2 ),

+ nn.UpsamplingBilinear2d( scale_factor=2 ),

+ nn.Conv2d( 64,128, (3,3), stride=(1,1), padding=(1,1) ),

+ nn.ReLU(),

+ nn.BatchNorm2d(128),

+

+ # nn.UpsamplingNearest2d( scale_factor=2 ),

+ nn.UpsamplingBilinear2d( scale_factor=2 ),

+ nn.Conv2d( 128,256, (3,3), stride=(1,1), padding=(1,1)),

+ nn.ReLU(),

+ nn.BatchNorm2d(256),

+

+ nn.Conv2d( 256,1, (5,5), stride=(1,1), padding=(2,2)),

+ nn.Sigmoid()

+

+ )

+

+ def forward(self, z):

+ img_nchw = self.model(z)

+ img_nhwc = img_nchw.permute(0, 2, 3, 1) # reformat from NCHW to NHWC

+ # img = img.view(img.size(0), *self.img_shape) # reformat from NCHW to NHWC

+ return img_nhwc

+

+

+

diff --git a/DCGAN-PyTorch/modules/QuickDrawDataModule.py b/DCGAN-PyTorch/modules/QuickDrawDataModule.py

new file mode 100644

index 0000000000000000000000000000000000000000..34a4ecfba7e5d123a833e5a6e58d14e4d4903d53

--- /dev/null

+++ b/DCGAN-PyTorch/modules/QuickDrawDataModule.py

@@ -0,0 +1,71 @@

+

+# ------------------------------------------------------------------

+# _____ _ _ _

+# | ___(_) __| | | ___

+# | |_ | |/ _` | |/ _ \

+# | _| | | (_| | | __/

+# |_| |_|\__,_|_|\___| GAN / QuickDrawDataModule

+# ------------------------------------------------------------------

+# Formation Introduction au Deep Learning (FIDLE)

+# CNRS/SARI/DEVLOG MIAI/EFELIA 2023 - https://fidle.cnrs.fr

+# ------------------------------------------------------------------

+# by JL Parouty (feb 2023) - PyTorch Lightning example

+

+

+import numpy as np

+import torch

+from lightning import LightningDataModule

+from torch.utils.data import DataLoader

+

+

+class QuickDrawDataModule(LightningDataModule):

+

+

+ def __init__( self, dataset_file='./sheep.npy', scale=1., batch_size=64, num_workers=4 ):

+

+ super().__init__()

+

+ print('\n---- QuickDrawDataModule initialization ----------------------------')

+ print(f'with : scale={scale} batch size={batch_size}')

+

+ self.scale = scale

+ self.dataset_file = dataset_file

+ self.batch_size = batch_size

+ self.num_workers = num_workers

+

+ self.dims = (28, 28, 1)

+ self.num_classes = 10

+

+

+

+ def prepare_data(self):

+ pass

+

+

+ def setup(self, stage=None):

+ print('\nDataModule Setup :')

+ # Load dataset

+ # Called at the beginning of each stage (train,val,test)

+ # Here, whatever the stage value, we'll have only one set.

+ data = np.load(self.dataset_file)

+ print('Original dataset shape : ',data.shape)

+

+ # Rescale

+ n=int(self.scale*len(data))

+ data = data[:n]

+ print('Rescaled dataset shape : ',data.shape)

+

+ # Normalize, reshape and shuffle

+ data = data/255

+ data = data.reshape(-1,28,28,1)

+ data = torch.from_numpy(data).float()

+ print('Final dataset shape : ',data.shape)

+

+ print('Dataset loaded and ready.')

+ self.data_train = data

+

+

+ def train_dataloader(self):

+ # Note : Numpy ndarray is Dataset compliant

+ # Have map-style interface. See https://pytorch.org/docs/stable/data.html

+ return DataLoader( self.data_train, batch_size=self.batch_size, num_workers=self.num_workers )

\ No newline at end of file

diff --git a/DCGAN-PyTorch/modules/SmartProgressBar.py b/DCGAN-PyTorch/modules/SmartProgressBar.py

new file mode 100644

index 0000000000000000000000000000000000000000..3ebe192d0d9732da08125fa0503b2b6f7a59cf02

--- /dev/null

+++ b/DCGAN-PyTorch/modules/SmartProgressBar.py

@@ -0,0 +1,70 @@

+

+# ------------------------------------------------------------------

+# _____ _ _ _

+# | ___(_) __| | | ___

+# | |_ | |/ _` | |/ _ \

+# | _| | | (_| | | __/

+# |_| |_|\__,_|_|\___| GAN / SmartProgressBar

+# ------------------------------------------------------------------

+# Formation Introduction au Deep Learning (FIDLE)

+# CNRS/SARI/DEVLOG MIAI/EFELIA 2023 - https://fidle.cnrs.fr

+# ------------------------------------------------------------------

+# by JL Parouty (feb 2023) - PyTorch Lightning example

+

+from lightning.pytorch.callbacks.progress.base import ProgressBarBase

+from tqdm import tqdm

+import sys

+

+class SmartProgressBar(ProgressBarBase):

+

+ def __init__(self, verbosity=2):

+ super().__init__()

+ self.verbosity = verbosity

+

+ def disable(self):

+ self.enable = False

+

+

+ def setup(self, trainer, pl_module, stage):

+ super().setup(trainer, pl_module, stage)

+ self.stage = stage

+

+

+ def on_train_epoch_start(self, trainer, pl_module):

+ super().on_train_epoch_start(trainer, pl_module)

+ if not self.enable : return

+

+ if self.verbosity==2:

+ self.progress=tqdm( total=trainer.num_training_batches,

+ desc=f'{self.stage} {trainer.current_epoch+1}/{trainer.max_epochs}',

+ ncols=100, ascii= " >",

+ bar_format='{l_bar}{bar}| [{elapsed}] {postfix}')

+

+

+

+ def on_train_epoch_end(self, trainer, pl_module):

+ super().on_train_epoch_end(trainer, pl_module)

+

+ if not self.enable : return

+

+ if self.verbosity==2:

+ self.progress.close()

+

+ if self.verbosity==1:

+ print(f'Train {trainer.current_epoch+1}/{trainer.max_epochs} Done.')

+

+

+ def on_train_batch_end(self, trainer, pl_module, outputs, batch, batch_idx):

+ super().on_train_batch_end(trainer, pl_module, outputs, batch, batch_idx)

+

+ if not self.enable : return

+

+ if self.verbosity==2:

+ metrics = {}

+ for name,value in trainer.logged_metrics.items():

+ metrics[name]=f'{float( trainer.logged_metrics[name] ):3.3f}'

+ self.progress.set_postfix(metrics)

+ self.progress.update(1)

+

+

+progress_bar = SmartProgressBar(verbosity=2)

diff --git a/DCGAN-PyTorch/modules/WGANGP.py b/DCGAN-PyTorch/modules/WGANGP.py

new file mode 100644

index 0000000000000000000000000000000000000000..030740b562d2bbac62f17fc671e530fc9383ed7d

--- /dev/null

+++ b/DCGAN-PyTorch/modules/WGANGP.py

@@ -0,0 +1,229 @@

+

+# ------------------------------------------------------------------

+# _____ _ _ _

+# | ___(_) __| | | ___

+# | |_ | |/ _` | |/ _ \

+# | _| | | (_| | | __/

+# |_| |_|\__,_|_|\___| GAN / GAN LigthningModule

+# ------------------------------------------------------------------

+# Formation Introduction au Deep Learning (FIDLE)

+# CNRS/SARI/DEVLOG MIAI/EFELIA 2023 - https://fidle.cnrs.fr

+# ------------------------------------------------------------------

+# by JL Parouty (feb 2023) - PyTorch Lightning example

+

+

+import sys

+import numpy as np

+import torch

+import torch.nn.functional as F

+import torchvision

+from lightning import LightningModule

+

+

+class WGANGP(LightningModule):

+

+ # -------------------------------------------------------------------------

+ # Init

+ # -------------------------------------------------------------------------

+ #

+ def __init__(

+ self,

+ data_shape = (None,None,None),

+ latent_dim = None,

+ lr = 0.0002,

+ b1 = 0.5,

+ b2 = 0.999,

+ batch_size = 64,

+ lambda_gp = 10,

+ generator_class = None,

+ discriminator_class = None,

+ **kwargs,

+ ):

+ super().__init__()

+

+ print('\n---- WGANGP initialization -----------------------------------------')

+

+ # ---- Hyperparameters

+ #

+ # Enable Lightning to store all the provided arguments under the self.hparams attribute.

+ # These hyperparameters will also be stored within the model checkpoint.

+ #

+ self.save_hyperparameters()

+

+ print('Hyperarameters are :')

+ for name,value in self.hparams.items():

+ print(f'{name:24s} : {value}')

+

+ # ---- Generator/Discriminator instantiation

+ #

+ # self.generator = Generator(latent_dim=self.hparams.latent_dim, img_shape=data_shape)

+ # self.discriminator = Discriminator(img_shape=data_shape)

+

+ print('Submodels :')

+ module=sys.modules['__main__']

+ class_g = getattr(module, generator_class)

+ class_d = getattr(module, discriminator_class)

+ self.generator = class_g( latent_dim=latent_dim, data_shape=data_shape)

+ self.discriminator = class_d( latent_dim=latent_dim, data_shape=data_shape)

+

+ # ---- Validation and example data

+ #

+ self.validation_z = torch.randn(8, self.hparams.latent_dim)

+ self.example_input_array = torch.zeros(2, self.hparams.latent_dim)

+

+

+ def forward(self, z):

+ return self.generator(z)

+

+

+ def adversarial_loss(self, y_hat, y):

+ return F.binary_cross_entropy(y_hat, y)

+

+

+

+# ------------------------------------------------------------------------------------ TO DO -------------------

+

+ # see : # from : https://github.com/rosshemsley/gander/blob/main/gander/models/gan.py

+

+ def gradient_penalty(self, real_images, fake_images):

+

+ batch_size = real_images.size(0)

+

+ # ---- Create interpolate images

+ #

+ # Get a random vector : size=([batch_size])

+ epsilon = torch.distributions.uniform.Uniform(0, 1).sample([batch_size])

+ # Add dimensions to match images batch : size=([batch_size,1,1,1])

+ epsilon = epsilon[:, None, None, None]

+ # Put epsilon a the right place

+ epsilon = epsilon.type_as(real_images)

+ # Do interpolation

+ interpolates = epsilon * fake_images + ((1 - epsilon) * real_images)

+

+ # ---- Use autograd to compute gradient

+ #

+ # The key to making this work is including `create_graph`, this means that the computations

+ # in this penalty will be added to the computation graph for the loss function, so that the

+ # second partial derivatives will be correctly computed.

+ #

+ interpolates.requires_grad = True

+

+ pred_labels = self.discriminator.forward(interpolates)

+

+ gradients = torch.autograd.grad( inputs = interpolates,

+ outputs = pred_labels,

+ grad_outputs = torch.ones_like(pred_labels),

+ create_graph = True,

+ only_inputs = True )[0]

+

+ grad_flat = gradients.view(batch_size, -1)

+ grad_norm = torch.linalg.norm(grad_flat, dim=1)

+

+ grad_penalty = (grad_norm - 1) ** 2

+

+ return grad_penalty

+

+

+

+# ------------------------------------------------------------------------------------------------------------------

+

+

+ def training_step(self, batch, batch_idx, optimizer_idx):

+

+ real_imgs = batch

+ batch_size = batch.size(0)

+ lambda_gp = self.hparams.lambda_gp

+

+ # ---- Get some latent space vectors and fake images

+ # We use type_as() to make sure we initialize z on the right device (GPU/CPU).

+ #

+ z = torch.randn(batch_size, self.hparams.latent_dim)

+ z = z.type_as(real_imgs)

+

+ fake_imgs = self.generator.forward(z)

+

+ # ---- Train generator

+ # Generator use optimizer #0

+ # We try to generate false images that could have nive critics

+ #

+ if optimizer_idx == 0:

+

+ # Get critics

+ critics = self.discriminator.forward(fake_imgs)

+

+ # Loss

+ g_loss = -critics.mean()

+

+ # Log

+ self.log("g_loss", g_loss, prog_bar=True)

+

+ return g_loss

+

+ # ---- Train discriminator

+ # Discriminator use optimizer #1

+ # We try to make the difference between fake images and real ones

+ #

+ if optimizer_idx == 1:

+

+ # Get critics

+ critics_real = self.discriminator.forward(real_imgs)

+ critics_fake = self.discriminator.forward(fake_imgs)

+

+ # Get gradient penalty

+ grad_penalty = self.gradient_penalty(real_imgs, fake_imgs)

+

+ # Loss

+ d_loss = critics_fake.mean() - critics_real.mean() + lambda_gp*grad_penalty.mean()

+

+ # Log loss

+ self.log("d_loss", d_loss, prog_bar=True)

+

+ return d_loss

+

+

+ def configure_optimizers(self):

+

+ lr = self.hparams.lr

+ b1 = self.hparams.b1

+ b2 = self.hparams.b2

+

+ # With a GAN, we need 2 separate optimizer.

+ # opt_g to optimize the generator #0

+ # opt_d to optimize the discriminator #1

+ # opt_g = torch.optim.Adam(self.generator.parameters(), lr=lr, betas=(b1, b2))

+ # opt_d = torch.optim.Adam(self.discriminator.parameters(), lr=lr, betas=(b1, b2),)

+ opt_g = torch.optim.Adam(self.generator.parameters(), lr=lr)

+ opt_d = torch.optim.Adam(self.discriminator.parameters(), lr=lr)

+ return [opt_g, opt_d], []

+

+

+ def training_epoch_end(self, outputs):

+

+ # Get our validation latent vectors as z

+ # z = self.validation_z.type_as(self.generator.model[0].weight)

+

+ # ---- Log Graph

+ #

+ if(self.current_epoch==1):

+ sampleImg=torch.rand((1,28,28,1))

+ sampleImg=sampleImg.type_as(self.generator.model[0].weight)

+ self.logger.experiment.add_graph(self.discriminator,sampleImg)

+

+ # ---- Log d_loss/epoch

+ #

+ g_loss, d_loss = 0,0

+ for metrics in outputs:

+ g_loss+=float( metrics[0]['loss'] )

+ d_loss+=float( metrics[1]['loss'] )

+ g_loss, d_loss = g_loss/len(outputs), d_loss/len(outputs)

+ self.logger.experiment.add_scalar("g_loss/epochs",g_loss, self.current_epoch)

+ self.logger.experiment.add_scalar("d_loss/epochs",d_loss, self.current_epoch)

+

+ # ---- Log some of these images

+ #

+ z = torch.randn(self.hparams.batch_size, self.hparams.latent_dim)

+ z = z.type_as(self.generator.model[0].weight)

+ sample_imgs = self.generator(z)

+ sample_imgs = sample_imgs.permute(0, 3, 1, 2) # from NHWC to NCHW

+ grid = torchvision.utils.make_grid(tensor=sample_imgs, nrow=12, )

+ self.logger.experiment.add_image(f"Generated images", grid,self.current_epoch)

diff --git a/DDPM/01-ddpm.ipynb b/DDPM/01-ddpm.ipynb

new file mode 100755

index 0000000000000000000000000000000000000000..06a7936777604f8d5d5cc8132fbed8ebc27df4eb

--- /dev/null

+++ b/DDPM/01-ddpm.ipynb

@@ -0,0 +1,820 @@

+{

+ "cells": [

+ {

+ "attachments": {},

+ "cell_type": "markdown",

+ "id": "756b572d",

+ "metadata": {},

+ "source": [

+ "<img width=\"800px\" src=\"../fidle/img/00-Fidle-header-01.svg\"></img>\n",

+ "\n",

+ "# <!-- TITLE --> [DDPM1] - Fashion MNIST Generation with DDPM\n",

+ "<!-- DESC --> Diffusion Model example, to generate Fashion MNIST images.\n",

+ "\n",

+ "<!-- AUTHOR : Hatim Bourfoune (CNRS/IDRIS), Maxime Song (CNRS/IDRIS) -->\n",

+ "\n",

+ "## Objectives :\n",

+ " - Understanding and implementing a **Diffusion Model** neurals network (DDPM)\n",

+ "\n",

+ "The calculation needs being important, it is preferable to use a very simple dataset such as MNIST to start with. \n",

+ "...MNIST with a small scale (need to adapt the code !) if you haven't a GPU ;-)\n",

+ "\n",

+ "\n",

+ "## Acknowledgements :\n",

+ "This notebook was heavily inspired by this [article](https://huggingface.co/blog/annotated-diffusion) and this [notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/examples/annotated_diffusion.ipynb#scrollTo=5153024b). "

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "54a15542",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import math\n",

+ "from inspect import isfunction\n",

+ "from functools import partial\n",

+ "import random\n",

+ "import IPython\n",

+ "\n",

+ "import matplotlib.pyplot as plt\n",

+ "from tqdm.auto import tqdm\n",

+ "from einops import rearrange\n",

+ "\n",

+ "import torch\n",

+ "from torch import nn, einsum\n",

+ "import torch.nn.functional as F\n",

+ "from datasets import load_dataset, load_from_disk\n",

+ "\n",

+ "from torchvision import transforms\n",

+ "from torchvision.utils import make_grid\n",

+ "from torch.utils.data import DataLoader\n",

+ "import numpy as np\n",

+ "from PIL import Image\n",

+ "from torch.optim import Adam\n",

+ "\n",

+ "from torchvision.transforms import Compose, ToTensor, Lambda, ToPILImage, CenterCrop, Resize\n",

+ "import matplotlib.pyplot as plt"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "a854c28a",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "DEVICE = \"cuda\" if torch.cuda.is_available() else \"cpu\"\n",

+ "\n",

+ "# Reproductibility\n",

+ "torch.manual_seed(53)\n",

+ "random.seed(53)\n",

+ "np.random.seed(53)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "e33f10db",

+ "metadata": {},

+ "source": [

+ "## Create dataset\n",

+ "We will use the library HuggingFace Datasets to get our Fashion MNIST. If you are using Jean Zay, the dataset is already downloaded in the DSDIR, so you can use the code as it is. If you are not using Jean Zay, you should use the function load_dataset (commented) instead of load_from_disk. It will automatically download the dataset if it is not downloaded already."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "918c0138",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "dataset = load_dataset(\"fashion_mnist\") \n",

+ "dataset"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "cfe4d4f5",

+ "metadata": {},

+ "source": [

+ "As you can see the dataset is composed of two subparts: train and test. So the dataset is already split for us. We'll use the train part for now. <br/>\n",

+ "We can also see that the dataset as two features per sample: 'image' corresponding to the PIL version of the image and 'label' corresponding to the class of the image (shoe, shirt...). We can also see that there are 60 000 samples in our train dataset."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "2280400d",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "train_dataset = dataset['train']\n",

+ "train_dataset[0]"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "7978ad3d",

+ "metadata": {},

+ "source": [

+ "Each sample of a HuggingFace dataset is a dictionary containing the data."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "0d157e11",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "image = train_dataset[0]['image']\n",

+ "image"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "5dea3e5a",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "image_array = np.asarray(image, dtype=np.uint8)\n",

+ "print(f\"shape of the image: {image_array.shape}\")\n",

+ "print(f\"min: {image_array.min()}, max: {image_array.max()}\")"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "f86937e9",

+ "metadata": {},

+ "source": [

+ "We will now create a function that get the Fashion MNIST dataset needed, apply all the transformations we want on it and encapsulate that dataset in a dataloader."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "e646a7b1",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# load hugging face dataset from the DSDIR\n",

+ "def get_dataset(data_path, batch_size, test = False):\n",

+ " \n",

+ " dataset = load_from_disk(data_path)\n",

+ " # dataset = load_dataset(data_path) # Use this one if you're not on Jean Zay\n",

+ "\n",

+ " # define image transformations (e.g. using torchvision)\n",

+ " transform = Compose([\n",

+ " transforms.RandomHorizontalFlip(), # Data augmentation\n",

+ " transforms.ToTensor(), # Transform PIL image into tensor of value between [0,1]\n",

+ " transforms.Lambda(lambda t: (t * 2) - 1) # Normalize values between [-1,1]\n",

+ " ])\n",

+ "\n",

+ " # define function for HF dataset transform\n",

+ " def transforms_im(examples):\n",

+ " examples['pixel_values'] = [transform(image) for image in examples['image']]\n",

+ " del examples['image']\n",

+ " return examples\n",

+ "\n",

+ " dataset = dataset.with_transform(transforms_im).remove_columns('label') # We don't need it \n",

+ " channels, image_size, _ = dataset['train'][0]['pixel_values'].shape\n",

+ " \n",

+ " if test:\n",

+ " dataloader = DataLoader(dataset['test'], batch_size=batch_size)\n",

+ " else:\n",

+ " dataloader = DataLoader(dataset['train'], batch_size=batch_size, shuffle=True)\n",

+ "\n",

+ " len_dataloader = len(dataloader)\n",

+ " print(f\"channels: {channels}, image dimension: {image_size}, len_dataloader: {len_dataloader}\") \n",

+ " \n",

+ " return dataloader, channels, image_size, len_dataloader"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "413a3fea",

+ "metadata": {},

+ "source": [

+ "We choose the parameters and we instantiate the dataset:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "918233da",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# Dataset parameters\n",

+ "batch_size = 64\n",

+ "data_path = \"/gpfsdswork/dataset/HuggingFace/fashion_mnist/fashion_mnist/\"\n",

+ "# data_path = \"fashion_mnist\" # If you're not using Jean Zay"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "85939f9d",

+ "metadata": {

+ "tags": []

+ },

+ "outputs": [],

+ "source": [

+ "train_dataloader, channels, image_size, len_dataloader = get_dataset(data_path, batch_size)\n",

+ "\n",

+ "batch_image = next(iter(train_dataloader))['pixel_values']\n",

+ "batch_image.shape"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "104db929",

+ "metadata": {},

+ "source": [

+ "We also create a function that allows us to see a batch of images:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "196370c2",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "def normalize_im(images):\n",

+ " shape = images.shape\n",

+ " images = images.view(shape[0], -1)\n",

+ " images -= images.min(1, keepdim=True)[0]\n",

+ " images /= images.max(1, keepdim=True)[0]\n",

+ " return images.view(shape)\n",

+ "\n",

+ "def show_images(batch):\n",

+ " plt.imshow(torch.permute(make_grid(normalize_im(batch)), (1,2,0)))\n",

+ " plt.show()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "96334e60",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "show_images(batch_image[:])"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "1befee67",

+ "metadata": {},

+ "source": [

+ "## Forward Diffusion\n",

+ "The aim of this part is to create a function that will add noise to any image at any step (following the DDPM diffusion process)."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "231629ad",

+ "metadata": {},

+ "source": [

+ "### Beta scheduling\n",

+ "First, we create a function that will compute every betas of every steps (following a specific shedule). We will only create a function for the linear schedule (original DDPM) and the cosine schedule (improved DDPM):"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "0039d38d",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# Different type of beta schedule\n",

+ "def linear_beta_schedule(timesteps, beta_start = 0.0001, beta_end = 0.02):\n",

+ " \"\"\"\n",

+ " linar schedule from the original DDPM paper https://arxiv.org/abs/2006.11239\n",

+ " \"\"\"\n",

+ " return torch.linspace(beta_start, beta_end, timesteps)\n",

+ "\n",

+ "\n",

+ "def cosine_beta_schedule(timesteps, s=0.008):\n",

+ " \"\"\"\n",

+ " cosine schedule as proposed in https://arxiv.org/abs/2102.09672\n",

+ " \"\"\"\n",

+ " steps = timesteps + 1\n",

+ " x = torch.linspace(0, timesteps, steps)\n",

+ " alphas_cumprod = torch.cos(((x / timesteps) + s) / (1 + s) * torch.pi * 0.5) ** 2\n",

+ " alphas_cumprod = alphas_cumprod / alphas_cumprod[0]\n",

+ " betas = 1 - (alphas_cumprod[1:] / alphas_cumprod[:-1])\n",

+ " return torch.clip(betas, 0.0001, 0.9999)\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "e18d1b38",

+ "metadata": {},

+ "source": [

+ "### Constants calculation\n",

+ "We will now create a function to calculate every constants we need for our Diffusion Model. <br/>\n",

+ "Constants:\n",

+ "- $ \\beta_t $: betas\n",

+ "- $ \\sqrt{\\frac{1}{\\alpha_t}} $: sqrt_recip_alphas\n",

+ "- $ \\sqrt{\\bar{\\alpha}_t} $: sqrt_alphas_cumprod\n",

+ "- $ \\sqrt{1-\\bar{\\alpha}_t} $: sqrt_one_minus_alphas_cumprod\n",

+ "- $ \\tilde{\\beta}_t = \\beta_t\\frac{1-\\bar{\\alpha}_{t-1}}{1-\\bar{\\alpha}_t} $: posterior_variance"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "84251513",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# Function to get alphas and betas\n",

+ "def get_alph_bet(timesteps, schedule=cosine_beta_schedule):\n",

+ " \n",

+ " # define beta\n",

+ " betas = schedule(timesteps)\n",

+ "\n",

+ " # define alphas \n",

+ " alphas = 1. - betas\n",

+ " alphas_cumprod = torch.cumprod(alphas, axis=0) # cumulative product of alpha\n",

+ " alphas_cumprod_prev = F.pad(alphas_cumprod[:-1], (1, 0), value=1.0) # corresponding to the prev const\n",

+ " sqrt_recip_alphas = torch.sqrt(1.0 / alphas)\n",

+ "\n",

+ " # calculations for diffusion q(x_t | x_{t-1}) and others\n",

+ " sqrt_alphas_cumprod = torch.sqrt(alphas_cumprod)\n",

+ " sqrt_one_minus_alphas_cumprod = torch.sqrt(1. - alphas_cumprod)\n",

+ "\n",

+ " # calculations for posterior q(x_{t-1} | x_t, x_0)\n",

+ " posterior_variance = betas * (1. - alphas_cumprod_prev) / (1. - alphas_cumprod)\n",

+ " \n",

+ " const_dict = {\n",

+ " 'betas': betas,\n",

+ " 'sqrt_recip_alphas': sqrt_recip_alphas,\n",

+ " 'sqrt_alphas_cumprod': sqrt_alphas_cumprod,\n",

+ " 'sqrt_one_minus_alphas_cumprod': sqrt_one_minus_alphas_cumprod,\n",

+ " 'posterior_variance': posterior_variance\n",

+ " }\n",

+ " \n",

+ " return const_dict"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "d5658d8e",

+ "metadata": {},

+ "source": [

+ "### Difference between Linear and Cosine schedule\n",

+ "We can check the differences between the constants when we change the parameters:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "7bfdf98c",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "T = 1000\n",

+ "const_linear_dict = get_alph_bet(T, schedule=linear_beta_schedule)\n",

+ "const_cosine_dict = get_alph_bet(T, schedule=cosine_beta_schedule)\n",

+ "\n",

+ "plt.plot(np.arange(T), const_linear_dict['sqrt_alphas_cumprod'], color='r', label='linear')\n",

+ "plt.plot(np.arange(T), const_cosine_dict['sqrt_alphas_cumprod'], color='g', label='cosine')\n",

+ " \n",

+ "# Naming the x-axis, y-axis and the whole graph\n",

+ "plt.xlabel(\"Step\")\n",

+ "plt.ylabel(\"alpha_bar\")\n",

+ "plt.title(\"Linear and Cosine schedules\")\n",

+ " \n",

+ "# Adding legend, which helps us recognize the curve according to it's color\n",

+ "plt.legend()\n",

+ " \n",

+ "# To load the display window\n",

+ "plt.show()"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "b1537984",

+ "metadata": {},

+ "source": [

+ "### Definition of $ q(x_t|x_0) $"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "cb10e05b",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# extract the values needed for time t\n",

+ "def extract(constants, batch_t, x_shape):\n",

+ " diffusion_batch_size = batch_t.shape[0]\n",

+ " \n",

+ " # get a list of the appropriate constants of each timesteps\n",

+ " out = constants.gather(-1, batch_t.cpu()) \n",

+ " \n",

+ " return out.reshape(diffusion_batch_size, *((1,) * (len(x_shape) - 1))).to(batch_t.device)\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "2f5991bd",

+ "metadata": {},

+ "source": [

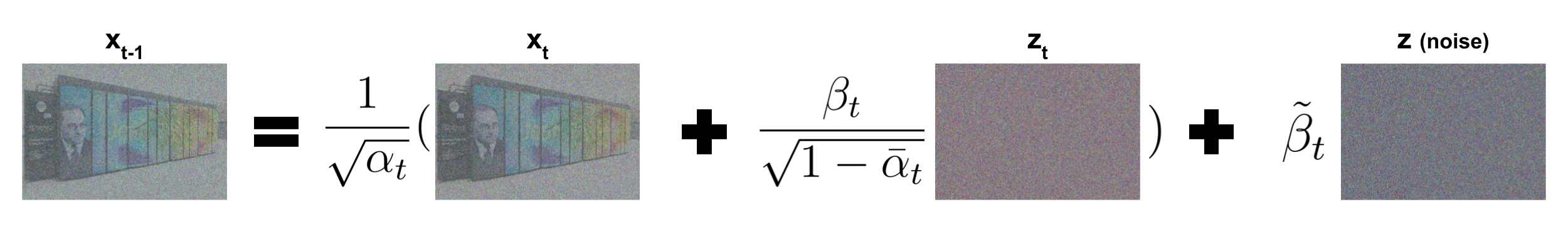

+ "Now that we have every constants that we need, we can create a function that will add noise to an image following the forward diffusion process. This function (q_sample) corresponds to $ q(x_t|x_0) $:\n",

+ "\n",

+ ""

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "28645450",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# forward diffusion (using the nice property)\n",

+ "def q_sample(constants_dict, batch_x0, batch_t, noise=None):\n",

+ " if noise is None:\n",

+ " noise = torch.randn_like(batch_x0)\n",

+ "\n",

+ " sqrt_alphas_cumprod_t = extract(constants_dict['sqrt_alphas_cumprod'], batch_t, batch_x0.shape)\n",

+ " sqrt_one_minus_alphas_cumprod_t = extract(\n",

+ " constants_dict['sqrt_one_minus_alphas_cumprod'], batch_t, batch_x0.shape\n",

+ " )\n",

+ "\n",

+ " return sqrt_alphas_cumprod_t * batch_x0 + sqrt_one_minus_alphas_cumprod_t * noise"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "dcc05f40",

+ "metadata": {},

+ "source": [

+ "We can now visualize how the forward diffusion process adds noise gradually the image according to its parameters:"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "7ed20740",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "T = 1000\n",

+ "const_linear_dict = get_alph_bet(T, schedule=linear_beta_schedule)\n",

+ "const_cosine_dict = get_alph_bet(T, schedule=cosine_beta_schedule)\n",

+ "\n",

+ "batch_t = torch.arange(batch_size)*(T//batch_size) # get a range of timesteps from 0 to T\n",

+ "print(f\"timesteps: {batch_t}\")\n",

+ "noisy_batch_linear = q_sample(const_linear_dict, batch_image, batch_t, noise=None)\n",

+ "noisy_batch_cosine = q_sample(const_cosine_dict, batch_image, batch_t, noise=None)\n",

+ "\n",

+ "print(\"Original images:\")\n",

+ "show_images(batch_image[:])\n",

+ "\n",

+ "print(\"Noised images with linear shedule:\")\n",

+ "show_images(noisy_batch_linear[:])\n",

+ "\n",

+ "print(\"Noised images with cosine shedule:\")\n",

+ "show_images(noisy_batch_cosine[:])"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "565d3c80",

+ "metadata": {},

+ "source": [

+ "## Reverse Diffusion Process"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "251808b0",

+ "metadata": {},

+ "source": [

+ "### Model definition\n",

+ "The reverse diffusion process is made by a deep learning model. We choosed a Unet model with attention. The model is optimized following some papers like [ConvNeXt](https://arxiv.org/pdf/2201.03545.pdf). You can inspect the model in the model.py file."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "29f00028",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "from model import Unet\n",

+ "\n",

+ "model = Unet( \n",

+ " dim=28,\n",

+ " init_dim=None,\n",

+ " out_dim=None,\n",

+ " dim_mults=(1, 2, 4),\n",

+ " channels=1,\n",

+ " with_time_emb=True,\n",

+ " convnext_mult=2,\n",

+ ")"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "0aaf936c",

+ "metadata": {},

+ "source": [

+ "### Definition of $ p_{\\theta}(x_{t-1}|x_t) $\n",

+ "Now we need a function to retrieve $x_{t-1}$ from $x_t$ and the predicted $z_t$. It corresponds to the reverse diffusion kernel:\n",

+ ""

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "00443d8e",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "@torch.no_grad()\n",

+ "def p_sample(constants_dict, batch_xt, predicted_noise, batch_t):\n",

+ " # We first get every constants needed and send them in right device\n",

+ " betas_t = extract(constants_dict['betas'], batch_t, batch_xt.shape).to(batch_xt.device)\n",

+ " sqrt_one_minus_alphas_cumprod_t = extract(\n",

+ " constants_dict['sqrt_one_minus_alphas_cumprod'], batch_t, batch_xt.shape\n",

+ " ).to(batch_xt.device)\n",

+ " sqrt_recip_alphas_t = extract(\n",

+ " constants_dict['sqrt_recip_alphas'], batch_t, batch_xt.shape\n",

+ " ).to(batch_xt.device)\n",

+ " \n",

+ " # Equation 11 in the ddpm paper\n",

+ " # Use predicted noise to predict the mean (mu theta)\n",

+ " model_mean = sqrt_recip_alphas_t * (\n",

+ " batch_xt - betas_t * predicted_noise / sqrt_one_minus_alphas_cumprod_t\n",

+ " )\n",

+ " \n",

+ " # We have to be careful to not add noise if we want to predict the final image\n",

+ " predicted_image = torch.zeros(batch_xt.shape).to(batch_xt.device)\n",

+ " t_zero_index = (batch_t == torch.zeros(batch_t.shape).to(batch_xt.device))\n",

+ " \n",

+ " # Algorithm 2 line 4, we add noise when timestep is not 1:\n",

+ " posterior_variance_t = extract(constants_dict['posterior_variance'], batch_t, batch_xt.shape)\n",

+ " noise = torch.randn_like(batch_xt) # create noise, same shape as batch_x\n",

+ " predicted_image[~t_zero_index] = model_mean[~t_zero_index] + (\n",

+ " torch.sqrt(posterior_variance_t[~t_zero_index]) * noise[~t_zero_index]\n",

+ " ) \n",

+ " \n",

+ " # If t=1 we don't add noise to mu\n",

+ " predicted_image[t_zero_index] = model_mean[t_zero_index]\n",

+ " \n",

+ " return predicted_image"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "c6e13aa1",

+ "metadata": {},

+ "source": [

+ "## Sampling "

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "459df8a2",

+ "metadata": {},

+ "source": [

+ "We will now create the sampling function. Given trained model, it should generate all the images we want."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "1e3cdf15",

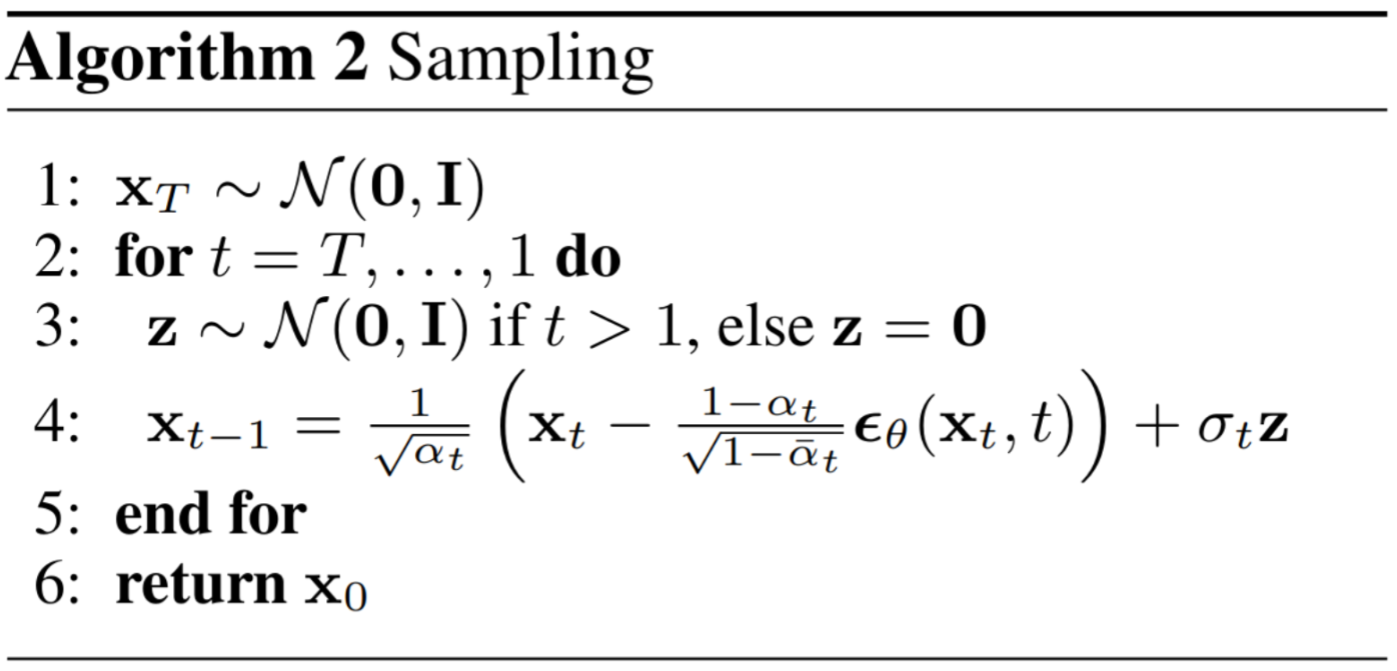

+ "metadata": {},

+ "source": [

+ "With the reverse diffusion process and a trained model, we can now make the sampling function corresponding to this algorithm:\n",

+ ""

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "710ef636",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# Algorithm 2 (including returning all images)\n",

+ "@torch.no_grad()\n",

+ "def sampling(model, shape, T, constants_dict):\n",

+ " b = shape[0]\n",

+ " # start from pure noise (for each example in the batch)\n",

+ " batch_xt = torch.randn(shape, device=DEVICE)\n",

+ " \n",

+ " batch_t = torch.ones(shape[0]) * T # create a vector with batch-size time the timestep\n",

+ " batch_t = batch_t.type(torch.int64).to(DEVICE)\n",

+ " \n",

+ " imgs = []\n",

+ "\n",

+ " for t in tqdm(reversed(range(0, T)), desc='sampling loop time step', total=T):\n",

+ " batch_t -= 1\n",

+ " predicted_noise = model(batch_xt, batch_t)\n",

+ " \n",

+ " batch_xt = p_sample(constants_dict, batch_xt, predicted_noise, batch_t)\n",

+ " \n",

+ " imgs.append(batch_xt.cpu())\n",

+ " \n",

+ " return imgs"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "df50675e",

+ "metadata": {},

+ "source": [

+ "## Training\n",

+ "We will instantiate every objects needed with fixed parameters here. We can try different hyperparameters by coming back here and changing the parameters."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "a3884522",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# Dataset parameters\n",

+ "batch_size = 64\n",

+ "data_path = \"/gpfsdswork/dataset/HuggingFace/fashion_mnist/fashion_mnist/\"\n",

+ "# data_path = \"fashion_mnist\" # If you're not using Jean Zay\n",

+ "train_dataloader, channels, image_size, len_dataloader = get_dataset(data_path, batch_size)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "b6b4a2bd",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "constants_dict = get_alph_bet(T, schedule=linear_beta_schedule)"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "ba387427",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "epochs = 3\n",

+ "T = 1000 # = T"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "31933494",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "model = Unet( \n",

+ " dim=image_size,\n",

+ " init_dim=None,\n",

+ " out_dim=None,\n",

+ " dim_mults=(1, 2, 4),\n",

+ " channels=channels,\n",

+ " with_time_emb=True,\n",

+ " convnext_mult=2,\n",

+ ").to(DEVICE)\n"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "92fb2a17",

+ "metadata": {

+ "tags": []

+ },

+ "outputs": [],

+ "source": [

+ "criterion = nn.SmoothL1Loss()\n",

+ "optimizer = Adam(model.parameters(), lr=1e-4)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

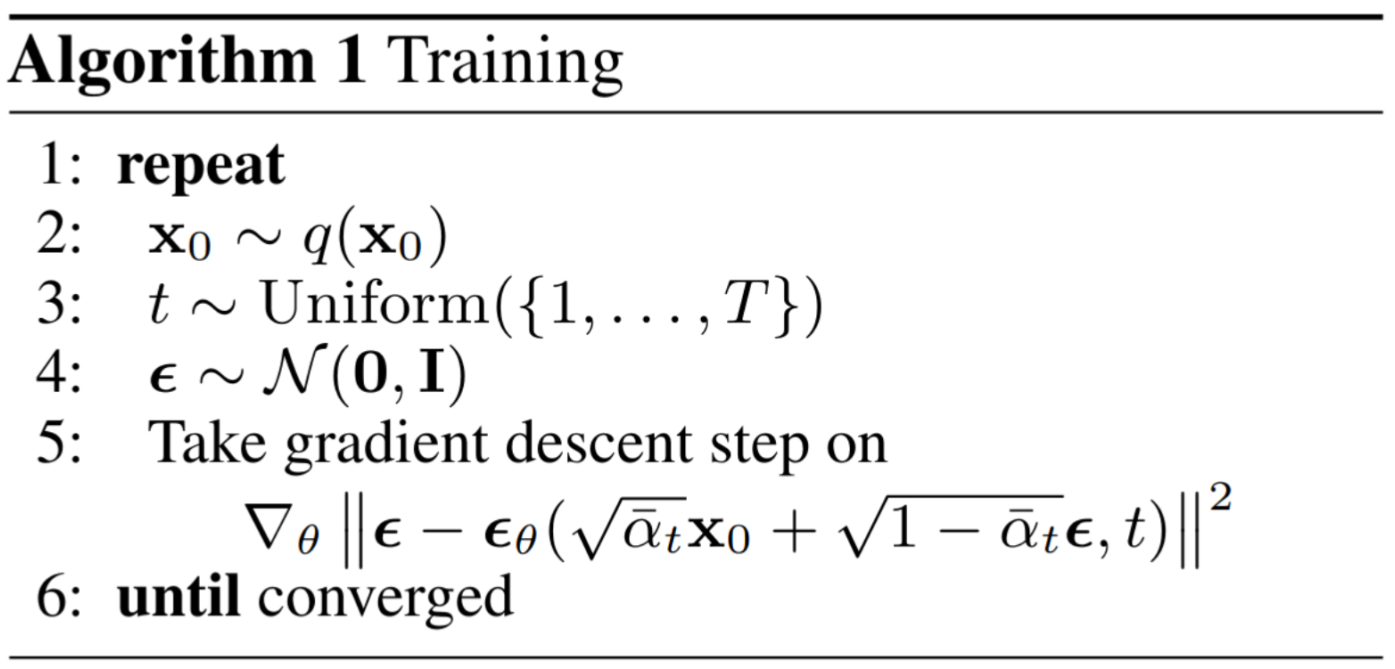

+ "id": "f059d28f",

+ "metadata": {},

+ "source": [

+ "### Training loop\n",

+ ""

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "4bab979d",

+ "metadata": {

+ "tags": []

+ },

+ "outputs": [],

+ "source": [

+ "for epoch in range(epochs):\n",

+ " loop = tqdm(train_dataloader, desc=f\"Epoch {epoch+1}/{epochs}\")\n",

+ " for batch in loop:\n",

+ " optimizer.zero_grad()\n",

+ "\n",

+ " batch_size_iter = batch[\"pixel_values\"].shape[0]\n",

+ " batch_image = batch[\"pixel_values\"].to(DEVICE)\n",

+ "\n",

+ " # Algorithm 1 line 3: sample t uniformally for every example in the batch\n",

+ " batch_t = torch.randint(0, T, (batch_size_iter,), device=DEVICE).long()\n",

+ " \n",

+ " noise = torch.randn_like(batch_image)\n",

+ " \n",

+ " x_noisy = q_sample(constants_dict, batch_image, batch_t, noise=noise)\n",

+ " predicted_noise = model(x_noisy, batch_t)\n",

+ " \n",

+ " loss = criterion(noise, predicted_noise)\n",

+ "\n",

+ " loop.set_postfix(loss=loss.item())\n",

+ "\n",

+ " loss.backward()\n",

+ " optimizer.step()\n",

+ "\n",

+ " \n",

+ "print(\"check generation:\") \n",

+ "list_gen_imgs = sampling(model, (batch_size, channels, image_size, image_size), T, constants_dict)\n",

+ "show_images(list_gen_imgs[-1])\n",

+ " "

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "id": "2489e819",

+ "metadata": {},

+ "source": [

+ "## View of the diffusion process"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "09ce451d",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "def make_gif(frame_list):\n",

+ " to_pil = ToPILImage()\n",

+ " frames = [to_pil(make_grid(normalize_im(tens_im))) for tens_im in frame_list]\n",

+ " frame_one = frames[0]\n",

+ " frame_one.save(\"sampling.gif.png\", format=\"GIF\", append_images=frames[::5], save_all=True, duration=10, loop=0)\n",

+ " \n",

+ " return IPython.display.Image(filename=\"./sampling.gif.png\")"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "id": "4f665ac3",

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "make_gif(list_gen_imgs)"

+ ]

+ },

+ {

+ "attachments": {},

+ "cell_type": "markdown",

+ "id": "bfa40b6b",

+ "metadata": {},

+ "source": [

+ "---\n",

+ "<img width=\"80px\" src=\"../fidle/img/00-Fidle-logo-01.svg\"></img>"

+ ]

+ }

+ ],

+ "metadata": {

+ "kernelspec": {

+ "display_name": "pytorch-gpu-1.13.0_py3.10.8",

+ "language": "python",

+ "name": "module-conda-env-pytorch-gpu-1.13.0_py3.10.8"

+ },

+ "language_info": {

+ "codemirror_mode": {

+ "name": "ipython",

+ "version": 3

+ },

+ "file_extension": ".py",

+ "mimetype": "text/x-python",

+ "name": "python",

+ "nbconvert_exporter": "python",

+ "pygments_lexer": "ipython3",

+ "version": "3.10.8"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 5

+}

diff --git a/DDPM/model.py b/DDPM/model.py

new file mode 100755

index 0000000000000000000000000000000000000000..8a2037f1dd4b8cf2f87102b969e5279f351bc359

--- /dev/null

+++ b/DDPM/model.py

@@ -0,0 +1,267 @@

+

+# <!-- TITLE --> [DDPM2] - DDPM Python classes

+# <!-- DESC --> Python classes used by DDMP Example

+# <!-- AUTHOR : Hatim Bourfoune (CNRS/IDRIS), Maxime Song (CNRS/IDRIS) -->

+

+

+import torch

+from torch import nn, einsum

+import torch.nn.functional as F

+from inspect import isfunction

+from functools import partial

+import math

+from einops import rearrange

+

+

+def exists(x):

+ return x is not None

+

+

+def default(val, d):

+ if exists(val):

+ return val

+ return d() if isfunction(d) else d

+

+

+class Residual(nn.Module):

+ def __init__(self, fn):

+ super().__init__()

+ self.fn = fn

+

+ def forward(self, x, *args, **kwargs):

+ return self.fn(x, *args, **kwargs) + x

+

+

+def Upsample(dim):

+ return nn.ConvTranspose2d(dim, dim, 4, 2, 1)

+

+

+def Downsample(dim):

+ return nn.Conv2d(dim, dim, 4, 2, 1)

+

+

+class SinusoidalPositionEmbeddings(nn.Module):

+ def __init__(self, dim):

+ super().__init__()

+ self.dim = dim

+

+ def forward(self, time):

+ device = time.device

+ half_dim = self.dim // 2

+ embeddings = math.log(10000) / (half_dim - 1)

+ embeddings = torch.exp(torch.arange(half_dim, device=device) * -embeddings)

+ embeddings = time[:, None] * embeddings[None, :]

+ embeddings = torch.cat((embeddings.sin(), embeddings.cos()), dim=-1)

+ return embeddings

+

+

+class ConvNextBlock(nn.Module):

+ """https://arxiv.org/abs/2201.03545"""

+

+ def __init__(self, dim, dim_out, *, time_emb_dim=None, mult=2, norm=True):

+ super().__init__()

+ self.mlp = (

+ nn.Sequential(nn.GELU(), nn.Linear(time_emb_dim, dim))

+ if exists(time_emb_dim)

+ else None

+ )

+

+ self.ds_conv = nn.Conv2d(dim, dim, 7, padding=3, groups=dim)

+

+ self.net = nn.Sequential(

+ nn.GroupNorm(1, dim) if norm else nn.Identity(),

+ nn.Conv2d(dim, dim_out * mult, 3, padding=1),

+ nn.GELU(),

+ nn.GroupNorm(1, dim_out * mult),

+ nn.Conv2d(dim_out * mult, dim_out, 3, padding=1),

+ )

+

+ self.res_conv = nn.Conv2d(dim, dim_out, 1) if dim != dim_out else nn.Identity()

+

+ def forward(self, x, time_emb=None):

+ h = self.ds_conv(x)

+

+ if exists(self.mlp) and exists(time_emb):

+ assert exists(time_emb), "time embedding must be passed in"

+ condition = self.mlp(time_emb)

+ h = h + rearrange(condition, "b c -> b c 1 1")

+

+ h = self.net(h)

+ return h + self.res_conv(x)

+

+

+class Attention(nn.Module):

+ def __init__(self, dim, heads=4, dim_head=32):

+ super().__init__()

+ self.scale = dim_head**-0.5

+ self.heads = heads

+ hidden_dim = dim_head * heads

+ self.to_qkv = nn.Conv2d(dim, hidden_dim * 3, 1, bias=False)

+ self.to_out = nn.Conv2d(hidden_dim, dim, 1)

+

+ def forward(self, x):

+ b, c, h, w = x.shape

+ qkv = self.to_qkv(x).chunk(3, dim=1)

+ q, k, v = map(

+ lambda t: rearrange(t, "b (h c) x y -> b h c (x y)", h=self.heads), qkv

+ )

+ q = q * self.scale

+

+ sim = einsum("b h d i, b h d j -> b h i j", q, k)

+ sim = sim - sim.amax(dim=-1, keepdim=True).detach()

+ attn = sim.softmax(dim=-1)

+

+ out = einsum("b h i j, b h d j -> b h i d", attn, v)

+ out = rearrange(out, "b h (x y) d -> b (h d) x y", x=h, y=w)

+ return self.to_out(out)

+

+

+class LinearAttention(nn.Module):

+ def __init__(self, dim, heads=4, dim_head=32):

+ super().__init__()

+ self.scale = dim_head**-0.5

+ self.heads = heads

+ hidden_dim = dim_head * heads

+ self.to_qkv = nn.Conv2d(dim, hidden_dim * 3, 1, bias=False)

+

+ self.to_out = nn.Sequential(nn.Conv2d(hidden_dim, dim, 1),

+ nn.GroupNorm(1, dim))

+

+ def forward(self, x):

+ b, c, h, w = x.shape

+ qkv = self.to_qkv(x).chunk(3, dim=1)

+ q, k, v = map(

+ lambda t: rearrange(t, "b (h c) x y -> b h c (x y)", h=self.heads), qkv

+ )

+

+ q = q.softmax(dim=-2)

+ k = k.softmax(dim=-1)

+

+ q = q * self.scale

+ context = torch.einsum("b h d n, b h e n -> b h d e", k, v)

+

+ out = torch.einsum("b h d e, b h d n -> b h e n", context, q)

+ out = rearrange(out, "b h c (x y) -> b (h c) x y", h=self.heads, x=h, y=w)

+ return self.to_out(out)

+

+

+class PreNorm(nn.Module):

+ def __init__(self, dim, fn):

+ super().__init__()

+ self.fn = fn

+ self.norm = nn.GroupNorm(1, dim)

+

+ def forward(self, x):

+ x = self.norm(x)

+ return self.fn(x)

+

+

+class Unet(nn.Module):

+ def __init__(

+ self,

+ dim,

+ init_dim=None,

+ out_dim=None,

+ dim_mults=(1, 2, 4, 8),

+ channels=3,